
BREAKTHROUGH

At times over the last year I thought this post would never happen.

Three years ago I wrote this sad elegy to the first (well, technically second) version of Holofunk. In that post I said,

Holofunk is going into a cocoon, and what emerges is going to be something quite different.

And the last two posts since then were both about “I’m making so much progress! Hooray for me! Everything will be working any minute now!”

Except… it wouldn’t. My last two years in a nutshell:

- 2016 – 2017: rewrote the graphics and UI in Unity, with no audio support at all.

- 2017 – 2018: rewrote the audio using C++ and the Windows 10 AudioGraph… and found it was impossible to get low latency. Tried with Windows WASAPI with the same results.

It was almost soul-killing, feeling like I had put in all those months of work and it still… sounded… wrong. Last fall I almost gave up.

BUT. My old friend and inspiration Beardyman came to Seattle for a gig, and I whined at him about my troubles, and he asked “Why don’t you use JUCE?” Why not, indeed? So I gave it one more shot.

And it worked. It supports the old ASIO audio standard which I was using three years ago. Turns out that’s the only way to get Holofunk to sound right on Windows. I had written it off because, as a non-Microsoft standard, it probably won’t work on HoloLens. BUT TOO BAD, because the longer I went on with Holofunk still not working, the worse my life became.

See, I have met quite a few amazing artists and humans through this project, and collaborating with them had become a joyous focus of my life. And I knew this just wasn’t that complicated technically. It was never a question of whether it was possible… but I didn’t expect it to take this many years. I was stunned recently to find my first description of the idea of Holofunk in an email from over a decade ago.

So the longer it took, the more I wondered whether I had it in me to get it working again. I actually pulled back from friends and from events, feeling like until I had Holofunk to share again, I wasn’t bringing much to the party. And moreover I had to use that time to hack.

It was rough, and my family and friends got sick of hearing me talk and talk and talk about how I was making progress, honest. Hell, I got sick of it myself. But eventually it started to come together again, and earlier this summer I realized the end was in sight. So this past summer has been a sprint, and now it’s finally fully revived and demoable once more. And I’ve got a nice open source looping sound library out of it too: https://github.com/RobJellinghaus/NowSound — no one else has yet pulled it but who knows what will happen next!

I’m working on demos and accompanying videos, so stay tuned (and not for long either — less than a month, tops). But it gives me frankly immeasurable relief and gratitude to say HOLOFUNK IS BACK. And the fun really starts three weeks from tonight when I demo to Alex Kipman… a goal that I’ve had for at least five years now!

Early 2018: Audible Progress!

Well, after three months of hacking, I’m very happy to have my low latency C++ streaming audio library in a genuinely working state:

https://github.com/RobJellinghaus/NowSound/

It comes with both a little live looping demo UWP app and a fairly complete data structure unit test library, even.

I am genuinely surprised at how modern C++ is actually becoming a reasonable language for Windows programming. The astoundingly good C++/WinRT library was indispensable for writing this. co_await now makes multithreaded C++ as pleasant as “await” in C#. Modern C++ ownership idioms (std::move, std::unique_ptr) mean that memory management is clean, correct, and efficient. I enjoyed this entire project, and I never, ever thought I would say that about writing C++.
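As a rough illustration of the ownership idioms mentioned above, here is a minimal sketch. The `SampleBuffer` and `Track` types are hypothetical names invented for this example and are not taken from NowSound. The point is only that `std::unique_ptr` plus `std::move` makes the hand-off of a buffer explicit, and that nothing ever needs to be deleted by hand.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical buffer type for illustration; not a NowSound class.
struct SampleBuffer {
    std::vector<float> samples;
    explicit SampleBuffer(std::size_t frame_count) : samples(frame_count, 0.0f) {}
};

// Hypothetical track that takes sole ownership of every buffer it is handed.
class Track {
public:
    // Taking the unique_ptr by value forces callers to write std::move,
    // so the ownership transfer is visible at the call site.
    void Append(std::unique_ptr<SampleBuffer> buffer) {
        assert(buffer != nullptr);
        total_frames_ += buffer->samples.size();
        buffers_.push_back(std::move(buffer));
    }

    std::size_t TotalFrames() const { return total_frames_; }
    std::size_t BufferCount() const { return buffers_.size(); }

private:
    // Each buffer is freed automatically when the Track is destroyed.
    std::vector<std::unique_ptr<SampleBuffer>> buffers_;
    std::size_t total_frames_ = 0;
};
```

A caller would write `track.Append(std::move(buffer));`, after which `buffer` is null and the track is the only owner.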

There is much more to do with this library, and I will be doing it. I will probably create a “dev” branch for my personal work. Feel free to fork; this is MIT licensed as I am happy to have people use it as example code, etc.

If you have an interest in low latency audio on Windows 10 UWP, I hope this is helpful to you, and please drop me a line. I will certainly be interested in any pull requests, though I will need to make sure all tests still pass and all apps still work, and I will want the code style to be preserved. No delete statements allowed!

Now, finally, I can get back to work on Holofunk itself… with about 100x the audio horsepower under the hood. Can’t freaking wait.

Big Ol’ 2017 Mega-Update: Progress, Almost Entirely Invisible

Well! Certainly has been a while since my last post here. In fact, I learned an important lesson: don’t go a whole year between blog posts. Because if you do, you might overlook the fact that your domain expired, because your spam filter ate all the emails from your registrar, and your domain might get taken over by a domain squatter that forges content in your name!

That’s why I now heartily welcome you to robjsoftware.info — probably a better domain name altogether, come to think of it.

So: this post is all about my Holofunk project. If you have no idea what that is, please check it out and then come on back and get ready for a big old geek fest. Sorry, this is pretty tech-heavy, no videos or cool pictures in here 😦

What Just Happened

Despite the complete lack of activity here, things have in fact been happening in Holofunk land. I posted an update on Facebook in the middle of the year, so let me just crib from that July 14th post:

Gggggghhhhhh I have been trying to resist saying anything, but I can’t. I am too excited SQUEEEEEEEEE

So, many of you know my Holofunk project. I’ve gone completely dark on it since last fall when I realized I had to rewrite it, as it only ran on one computer (driver problems elsewhere), and it was only in 2D.

With the help of various open source collaborators, I’ve beavered away since January. Last night I finally got the core systems working again, and the rewritten version made its first music!!!

I’ve completely switched audiovisual technologies. It’s now a 3D Unity app, using the AudioGraph APIs in Windows 10. It still uses the Kinect, but soon it will also support Windows Holographic. And soon after that, networked multi-user holographic performance, rendered for the audience by Kinect. Unity makes an enormous number of new possibilities relatively easy, so the sky is even less of a limit now.

After six years on this project I have learned to expect the unexpected, so no deadlines or dates! But the path is clear and I’ve had good support so far, so it’s full speed ahead.

Hopefully by fall I’ll have an alpha test version on the Windows Store, and soon thereafter, start performing with it again — and really finding collaborators, this time!

So immediately after that, summer vacation ate the rest of the summer, and then in September I implemented a gesture-controlled GUI with buttons you could reach out and grab via Kinect hand detection. (I learned the meaning of the term “diegetic” in the process. I love this project for things like that.) The whole goal here was to build an audio setup interface in the app itself, to avoid having some kind of clunky external “configurator” app or something.

And then… things broke. The latest version of Windows 10 somehow hosed the Universal Windows Platform (“UWP”) version of my Kinect+Unity app; it would freeze after less than a second of color video. My little Intel mini-PC totally failed to handle the new Unity version of Holofunk; the audio was terrible. And my old audio interface wouldn’t work with AudioGraph; it said the input device was always busy.

Basically, just when I was raring to go with my new GUI, I suddenly found myself with a bunch of busted hardware, and nothing working again. It actually crushed my morale quite seriously. So I took some time off in October and November to recharge.

What’s Happening Now

I am looking at where I am and realizing a few things. Regarding Kinect:

- The Kinect is dead — Microsoft has stopped making it. So any problems I run into trying to make a UWP Kinect app are unlikely to get any support.

- Therefore I should back away from UWP when it comes to Kinect.

- (Note that I am not going to back away from Kinect itself. Nothing else can do what it does, and it’s indispensable to the performance experience I’m creating. In fact I just bought two more to make sure I have backups!)

- The PC desktop version of my app seems to run just fine with Kinect — none of the UWP problems exist.

Regarding audio:

- Holofunk has always had problems with scaling up audio — using C# for audio handling is fundamentally a bad fit. Audio is the most latency-critical part of the system, and garbage collection jitter can be a disaster.

- The VST plugin standard is ubiquitous in the electronic music world, and Holofunk needs to have access to that ecosystem; but UWP apps can’t include random VST DLLs.

- I need an audio engine that is not written in managed code, and that can work on both UWP / HoloLens devices and on the PC, especially because it needs to support VST plugins on the PC.
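To make the latency point concrete, here is a small worked sketch of the arithmetic. The buffer sizes and the 48 kHz sample rate are illustrative assumptions, not measurements from Holofunk, and `SurvivesStall` is a deliberately simplified model: it only asks whether a pause (such as a garbage collection) is shorter than the audio already queued for the device.

```cpp
#include <cassert>

// Time, in milliseconds, that one buffer of audio represents.
// Each buffer is a hard deadline: the next one must be ready before this one finishes playing.
inline double BufferLatencyMs(int frames_per_buffer, int sample_rate_hz) {
    assert(frames_per_buffer > 0 && sample_rate_hz > 0);
    return 1000.0 * frames_per_buffer / sample_rate_hz;
}

// Simplified model (an assumption for illustration): a stall is harmless only if it is
// shorter than the total duration of the buffers already queued for playback.
inline bool SurvivesStall(double stall_ms, int frames_per_buffer, int sample_rate_hz,
                          int queued_buffers) {
    assert(queued_buffers >= 0);
    return stall_ms < queued_buffers * BufferLatencyMs(frames_per_buffer, sample_rate_hz);
}
```

With 480 frames at 48 kHz, each buffer lasts 10 ms, so with two buffers queued a 25 ms pause would already produce an audible dropout. Queuing more buffers buys safety only at the cost of added latency, which is the trade-off a managed audio path keeps running into.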

Putting all these things together, I have realized that I really need to build two apps, or one app that can ship in two forms:

- A desktop Unity app that:

  - runs on Windows desktop

  - uses the Mono runtime

  - supports Kinect

  - supports external USB audio interfaces

  - has a C++ audio engine

  - can load and run VST plugins

  - can detect hand poses and handle UI interaction

  - can send body data and sound data over the network to the HoloLens app

- A HoloLens UWP Unity app that:

  - runs on UWP devices (especially HoloLens)

  - uses the CLR / .NET runtime

  - does not support Kinect, but does support mixed reality

  - has a C++ audio engine (if running standalone, with local audio processing)

  - cannot load and run VST plugins

  - can receive body data and sound data over the network from the Kinect/PC app

With these available, the performance setup will be:

- Performer on stage:

  - wearing a HoloLens running the UWP app

    - The UWP app will be rendering body/sound data sent by the Kinect PC, rendering the UI locally

  - holding a microphone which broadcasts to the Kinect PC

- Kinect PC on stage watching performer:

  - running the PC app

  - handling all audio processing (receiving all microphone inputs, feeding sound to the room)

  - handling all gesture processing (using Kinect hand pose detection)

  - providing video output which feeds a projector viewed by the audience

  - sending sound and body pose data over the network to the HoloLens

The result will be that the Kinect PC is really mostly driving the show, but the performer nonetheless gets to immersively inhabit the performance rendered by their HoloLens.

Or, if there is no performance going on, the whole app could just run with all audio processing on the HoloLens… the only limitation would be no VST support.

Or, if the HoloLens version isn’t even done yet, two people could have all kinds of fun with just the Kinect PC app.

So all the above is the plan for 2018: manifest this massive multi-machine music modality.

But First

Observant readers will have noticed that both the apps posited above need a C++ audio engine. And after looking around I’ve concluded that there is no such audio engine that suits my requirements, which are:

- MINIMAL LATENCY

- supports both UWP apps and desktop apps

- focused on in-memory buffering

- VST support

So I have decided to write my own. That’s right: the next key piece of Holofunk’s development is implementing a C++ sound engine. From my investigation to date it looks like the right sound API to use is Windows WASAPI. (AudioGraph is managed only, and the ASIO standard is not supported for UWP apps.)
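For a sense of what "focused on in-memory buffering" can mean in practice, here is a minimal sketch of a loop held entirely in memory and read back with wraparound. `LoopBuffer` and its members are hypothetical names for this example only. This is not the planned engine's design, just the smallest version of the idea: the audio callback asks for some number of samples, and the loop supplies them, restarting from the beginning as often as needed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical in-memory loop; names are illustrative only.
class LoopBuffer {
public:
    explicit LoopBuffer(std::vector<float> recorded) : samples_(std::move(recorded)) {
        assert(!samples_.empty());
    }

    // Fill `out` with `count` samples, wrapping to the start of the loop as needed.
    void Read(float* out, std::size_t count) {
        std::size_t samples_remaining = count;
        while (samples_remaining > 0) {
            // Copy as much as possible up to the end of the loop, then wrap around.
            const std::size_t available = samples_.size() - position_;
            const std::size_t chunk = std::min(samples_remaining, available);
            std::copy_n(samples_.data() + position_, chunk, out);
            out += chunk;
            samples_remaining -= chunk;
            position_ = (position_ + chunk) % samples_.size();
        }
    }

private:
    std::vector<float> samples_;
    std::size_t position_ = 0;
};
```

Because `Read` performs no allocation and no I/O, it is the kind of code that can run inside a latency-critical audio callback.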

So between now and the end of 2017 I am going to put together the smallest, lightest, most minimal sound engine I possibly can, and hook up the PC build of Holofunk to it. If I get that working, I will add VST support. Also, this whole sub-project will be open sourced on GitHub, because there’s really nothing Holofunk-specific about it.

If anyone out there knows WASAPI and/or wants to get involved in an open source Windows-ecosystem C++ sound engine with VST support, let me know — I need beta testers and code reviewers for this piece! (And if anyone knows of an open source sound engine that meets these requirements already, let me know as well; I’d be happy to save some work!)

Once this is done and re-integrated with some more UI, then I’ll have a 3D Unity Kinect version of Holofunk that is basically at the functionality level of Holofunk circa 2015, only with Unity and WASAPI powering it.

At that point I’ll probably start working on networking with HoloLens, because that’s the future, baby.

Bits From My Git

If you’ve been reading this blog for a long time, you have the patience of a saint, and you would be right to wonder whether after all these years (years?!?!) there is really anything actually going on here. I mean, how many times can I rewrite this freaking project? And how many technologies are going to crumble out from under me in the process? And when am I going to have some more damned demos and fun jams like in the olden days???

I feel your pain. But it’s still gonna be a while yet. I do believe that once I get the bare-bones audio working, I will start jamming around with people, and I sure would love for that to happen in January 2018 or so. Or February 2018 at the latest. Not really too far off.

But in the meantime, I can at least throw you this bit of evidence that I really am doing something, honest. Here is my git commit log from this year, since creating my new repository for the Unity version of my app. The Kinect Unity plugin I’m using is excellent, but it’s not open source, so I’m no longer using a public repository. (For those, if any, still following along without being coders: these messages are my notes to myself whenever I make a change I want to keep in my main source code.)

So here’s the result of git log --pretty=format:"%ad %s" --date=short run against my private repository. Prepare for a glimpse far too deep into my late night machinations and ululations. Yes, I use too many exclamation points when I am even slightly excited. And… keep the faith, as I now go off to hack C++ sound APIs deep into the long winter nights….

- 2017-12-02 UWP version no bueno con Kinect sur Win10 1709. But PC version… looking very delightful! Time to go back to the future.

- 2017-11-24 Pick up just a couple other bits

- 2017-11-24 Update to Unity 2017.2.f2 (or thereabouts) and latest (and probably final) K2-Asset. AND, with Tascam 2×2 and non-pre-release Kinect, all foundation techs work on my main home PC again!!!

- 2017-09-30 Welp, audio graph finally gets set up on NUC… and audio is hideous, unrecognizable, distorted, broken. F M L.

- 2017-09-30 Debug simple issues in audio setup UI. Good: shows actual inputs & outputs, gets through CreateAudioGraphAsync! Bad: hits weird COM exception immediately after, blows up, leaves machine in weird state with lots of WinRT exceptions.

- 2017-09-28 Woot! The entire GUI flow actually works end to end!

- 2017-09-25 Woohoo!!! Stateful text field input buttons working together with GUI state machine and hand state capture functioning properly!!!!! NOYYYCE

- 2017-09-25 Text fields and OK buttons sort of kind of work properly now. But only the *first* OK button is OK. The second one, not so much. DEBUGGING TIME but first DINNER.

- 2017-09-25 Work in progress.

- 2017-09-24 Handedness detection works and carries state properly through tutorial. BOO && YA.

- 2017-09-24 Getting GUI state machine dialed in (instantiated rather than static; tracks which hand the user’s working with in tutorial). Latest code not tested yet, hit sleep timeout.

- 2017-09-22 Color and skeletal data working OK on NUC in release x64!!! Looks like unblocked?! ONWARDS ONCE AGAIN and maybe OK perf on Surfaces….

- 2017-09-22 Sigh… try disabling color map, user map, and background removal… still no joy on main PC. Let’s try on NUC.

- 2017-09-20 Latest local steps towards actual audio device selection.

- 2017-09-12 Start ACTUALLY IMPLEMENTING AUDIO INPUT/OUTPUT DEVICE SELECTION.

- 2017-09-10 Fix regression with hand events getting fired repeatedly; make sure OK button actually works! (and it does! And so does latest Windows insider release, with Kinect & Unity! PHEW.)

- 2017-09-10 Make the OK button do something!

- 2017-09-05 Actually got the CanvasTextButton working properly, sorting out all types of bugs along the way. Moral: when multiple hands can touch, having “isTouched” be per-update state, set idempotently by possibly multiple hands, is a good pattern. If it doesn’t scale, we’ll optimize it later.

- 2017-09-04 Theoretically got things wired up to support OK button capturing input from hand state machine. Tomorrow, will verify.

- 2017-09-04 Darn problem where adding source files to the UWP project puts them in the UWP generated sources directory and then they never get checked in :-O

- 2017-09-03 Don’t forget the scene changes

- 2017-09-03 Moving towards a real-ish event architecture. Already managed to unify “Loopie touching” and “Canvas UI element touching” so that’s a good win already 😀

- 2017-09-03 Semitransparent bones, OK-button background highlight.

- 2017-08-31 DIEGETIC OK BUTTON REACTS!!!!! In a terribly hacky way, but still IT WORKS! GraphicRayCaster does the Right Thing.

- 2017-08-30 Skeletal joint position averaging, helps a lot. Still very smooth. Working towards GUI position interception.

- 2017-08-28 Woot! New tutorial steps worked first time! Closed hand image works! Diegetic UI positioning works! Not sure what happened to damn GUIController component, but easy enough to sort out 🙂

- 2017-08-28 Add closed hand image and tutorial step for it. Start moving towards interactive OK button.

- 2017-08-06 Upgrade to Unity 2017.1, only it works! THANK YOU RUMEN!

- 2017-08-05 Guarded transitions! Tutorial interactions! Interaction states! Things are coming along nicely 🙂

- 2017-08-05 Fading and GUI state transitioning works!

- 2017-08-05 Welp, Unity 2017.1 borks UWP initialization of Kinect. Looks potentially like an async deadlock involving Wait loop in KinectManager? Anyway, UWP-mocking design means I’M NOT BLOCKED and can work on tutorial!!!

- 2017-07-16 Fix deletion bug — made Destroy() separate from Delete() but forgot to update the call site 😛

- 2017-07-15 YESSSSSS MUTING UNMUTING DELETING ALL WORK AGAIN. soooooooo simple and easy…… ahhhhhhhhhhh

- 2017-07-15 Good ol’ “while (samplesRemaining > 0) …” loop is back and working as great as ever 😀 Thanks, past me!

- 2017-07-14 !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

- 2017-07-13 Reviving the diagnostics to sort out the audio timing issues. WILL SORT IT ALL, this is all familiar stuff from five years ago 😀

- 2017-07-12 O M F G — I JUST HEARD SOMETHING!!!!!!!!!!!!!!!!!!!!

- 2017-07-10 …oops, forgot to save before pushing

- 2017-07-10 Getting closer… now running into some confusion about the exact time when shutting the recorded stream. BIG progress though!

- 2017-07-10 Oh yeah, HolofunkAudioTrack.BeatPositionNow is a thing and UWP-audio tracks handle time properly. NOW FOR ACTUAL PLAYBACK

- 2017-07-09 HUGE step forward! AudioGraph initialization working AND not totally breaking Unity & Kinect on UWP!!!!!

- 2017-07-07 Wow. Actual audio code in Holofunk2! Lots of TODOs still, and too tired to finish tonight, but it’s mostly there now 😀

- 2017-07-05 Fix a few issues in UWP version — need to sort out what is up with ThreadContract & Unity thread…

- 2017-07-03 OH GOODNESS now tracks handle FinishRecording properly. IT IS ALL SOOOO LOVELY.

- 2017-07-03 DA BEAT MEASURE IS BACK IN DA HOOOOOUSE

- 2017-07-03 Good progress towards having multiple beat-measure doodads per loop. Now just needs debugging.

- 2017-07-01 Progress towards BeatMeasures for loopies! Got the scene graph right… now just need to write the code to set all the colors properly.

- 2017-07-01 Toddle along towards Tracks keeping their duration.

- 2017-06-30 First taste of thread contracts. Don’t draw bones to untracked joints. Don’t add Unity C# files in UWP solution :-\

- 2017-06-30 The clock actually DOES something now!

- 2017-06-30 OK fine, the clock is a singleton already. Emulate Unity in this regard.

- 2017-06-30 Got multiple colors in and working, and a proto-clock, and tucked the bones away nicely where they belong. Now to have groovy boppin’ shapes!

- 2017-06-28 Semi-transparent loopies for the big win!

- 2017-06-28 WOW! Got translucent bone skeleton working with all my own rotated 3D axis/angle cylinders and camera-aligned positions and EVERYTHING!!!!!

- 2017-06-25 Forgot to save good old .csproj 😛

- 2017-06-25 Half-baked state… working towards having the right upcall interfaces and locking stories. Old code got rid of inter-thread queue, it seems, instead opting for direct locking! Woddya know. Will try to do likewise, with much use of ThreadContract.

- 2017-06-25 OUTSTANDING. State machine seems solid, ready for sound!!!

- 2017-06-19 Rebuild UWP solution to take it on the road to Idaho!

- 2017-06-17 HELLS YAAAAA — the INTERACTION ARMED/RECORD/POINTING/MUTE/UNMUTE STATE MACHINE LIVES AGAIN BAYBEEEEEE

- 2017-06-17 YEEEEEEHAAAAAW mute and unmute WORKS!!!!!

- 2017-06-16 WOOOOO I can TOUCH LOOPIES AGAIN!!! Figured out how to set material colors, got loopie touch updating working, used the tip about setting the scene update ordering… AWWWW YISSSSS

- 2017-06-14 3D objects being created!!!!!

- 2017-06-10 Just like that, mute/unmute revived with proper icons and interaction. Ahhhh. FINALLY.

- 2017-06-09 WOOOO got state machine working and revived game object stack and got sprites instantiating and BASIC HAND STATE MACHINE IS BACK!!!!!!

- 2017-06-04 Added hand-pose smoothing back in. Instant, dramatic improvement, just as expected! Better structure than last version, too.

- 2017-06-03 Got per-hand state machines back! But MAN, hand pose is noisy noisy NOISY. Did I really average it out before? Have to do that again it looks like… and also have to sort out how the heck the Unknown transitions are working, because that’s FLAKY. But… tomorrow!

- 2017-06-03 WOOT got multiple players and properly factored components and WEEEEE!!!!!

- 2017-06-03 Starting to work out the right Unity structure for a player and their components.

- 2017-05-25 OH YEAH! Sprites working for both hands, no rotation, correct Z order!!!!! WOOOOOO

- 2017-05-23 Moving to BackgroundRemoval + JointOverlay.

- 2017-05-18 Move Core and StateMachine down into Unity-land. Import Skinner and play around with it — scale and translation issues, but definitely cool anyway.

- 2017-05-18 Bring in StateMachine and AudioGraph libraries, or at least the vestiges thereof. No idea why project now has all kinds of NuGet complaints though…

- 2017-05-17 Background removal + avatar = AWESOMESAUCE!!!!!!!!!

- 2017-05-16 Move to Unity 5.6.1f1 and it indeed fixes the crash on quit/alt-tab! Thanks again Rumen for the heads up on that one!

- 2017-05-13 ACTUALLY GET CORE UWP LIBRARY WORKING (modulo build griping about version mismatch issues) WITH UNITY, KINECT, & UWP. ***finally***. NO GOING BACK NOW DANGIT

- 2017-05-13 Unbreak things *again* — upgrade to Kinect/Unity v2.13, and confirm UWP app actually works. Now to see if scene changes saved properly….

- 2017-05-09 OK, after fixing local Unity installation (5.6 upgrade, but not latest Kinect/Unity assets), UWP Kinect Unity app once again ACTUALLY RUNS. Now not going to MESS with it for SOME TIME.

- 2017-05-08 Whoops, can’t import non-UWP assemblies into UWP app. So, converted Core and need to convert StateMachine to UWP style. But let’s move this over on git first.

- 2017-05-03 Get Core and StateMachine back in there and compiling again. Exclude binaries and remove packages.config that was pulling in dear departed SharpDX.

- 2017-05-03 Try again to see what is some kind of reasonable Unity configuration to check in. Hopefully visible metadata and text format for assets will help.

- 2017-04-18 .gitignore file from https://github.com/github/gitignore/blob/master/Unity.gitignore
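The 2017-09-05 entry above describes a pattern worth spelling out: treat “isTouched” as per-update state that any number of hands may set idempotently. The sketch below shows one way that could look. It is written in C++ for illustration even though the original is a Unity C# component, and every name in it (`TouchableButton`, `Hand`, `UpdateButton`) is hypothetical, as is the simple rectangle hit test.

```cpp
#include <cassert>
#include <vector>

// Hypothetical UI element; stands in for something like the CanvasTextButton.
struct TouchableButton {
    bool is_touched = false;

    // Called once at the start of every update, before any hand is processed.
    void BeginUpdate() { is_touched = false; }

    // Idempotent: a second hand touching the same button changes nothing.
    void Touch() { is_touched = true; }
};

// Hypothetical hand position in the button's 2D plane.
struct Hand {
    float x;
    float y;
};

// One update: clear the flag, then let every hand that overlaps the button set it.
inline void UpdateButton(TouchableButton& button, const std::vector<Hand>& hands,
                         float left, float right, float bottom, float top) {
    assert(left <= right && bottom <= top);
    button.BeginUpdate();
    for (const Hand& hand : hands) {
        const bool inside = hand.x >= left && hand.x <= right &&
                            hand.y >= bottom && hand.y <= top;
        if (inside) {
            button.Touch();
        }
    }
}
```

Because the flag is rebuilt from scratch each update, there is no enter/exit bookkeeping per hand to get out of sync when one hand leaves while the other stays.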

The Future Is Further Than You Think

I failed to give a demo to Thomas Dolby tomorrow. He’s visiting Microsoft, but my amazing music project Holofunk is currently not working, so I’ve missed a chance to show it to one of my heroes. (I banged on it last night and it wouldn’t go, and I’ve learned from bitter experience that if it ain’t working 48 hours before go time, it’s time to bail.)

And therein lies a tale. If I can’t have the fun with Mr. Dolby, I can at least step back and think about exactly why not, and share that with you all, many of whom have been my supporters and fans on this project for quite a few years. Sit back and enjoy, this is going to be a bit long.

Holofunk: Amazing, yet Broken

Holofunk at its best is a pretty awesome wave-your-hands-and-sing-and-improvise-some-surprising-music experience. I’ve always wanted to try to get it out into the world and have lots of people play with it.

But it uses the Kinect. The Kinect is absolutely key to the whole experience. It’s what sees you, and knows what you’re gesturing, and knows how to record video of you and not the background. It’s a magic piece of hardware that does things nothing else can do for the price.

And the Kinect is a failure. As astonishing as it is, it is still not quite good enough to be really reliable. Holofunk has always had plenty of glitches and finicky interactions because of it, and really rapid motions (like video games tend to want you to make) confuse it. So it just hasn’t succeeded in the marketplace… there are almost no new games or indeed any software at all that use it. It doesn’t fully work with modern Windows 10 applications, and it’s not clear when it will.

Moreover, Holofunk also uses an audio interface standard called ASIO, that is actually quite old. Support for it varies widely in quality. In fact, support for sound hardware in general varies widely in quality… my current miseries are because my original audio interface (a Focusrite Scarlett 6i6) got bricked by a bad firmware upgrade, with tech support apparently unable to help; and an attempted replacement, a TASCAM US-2×2, has buggy drivers that blue-screen when I run my heavily multi-threaded, USB-intensive application.

So the bottom line here is: Holofunk was always more technically precarious than I ever realized. It’s probably kind of a miracle that I got it working as well as I did. In the two-plus years since my last post, I actually did quite a lot with it:

- Took it to a weekend rave in California, and got to play it with many of my old school techno friends from the nineties.

- Demonstrated it at the Seattle Mini Maker Faire, resulting in the video on holofunk.com.

- Got invited to the 2015 NW Loopfest, and did performances with it and a whole lineup of other loopers in Seattle, Portland, and Lincoln City.

- Played it at an underground art event sponsored by local luminary Esse Quam Videri, even getting people dancing with it for the first time!

But then Windows 10 was released, and I upgraded to it, and it broke a bunch of code in my graphics layer. I don’t hold this against Microsoft — I work for Microsoft in the Windows org, and I know that as hard as Microsoft works to keep backwards compatibility, there are just a lot of technologies that simply get old. So after some prolonged inertia in the first half of 2016, I finally managed to get the graphics fixed up… but then my audio hardware problems started.

It’s now clear to me that:

- Holofunk is an awesome and futuristic experience that really does represent a new way to improvise music.

- But it’s based on technologies that are fragile and/or obsolescing.

- So in its current form, I need to realize that it’s basically my baby, and that no one else is realistically going to get their hands on it.

- Nonetheless, it is a genuine glimpse of the future.

And all of this leads me to the second half of this post: I realized this morning that Holofunk is turning out to be a microcosm of my whole career at Microsoft.

Inventing The Future Is Only The Beginning

It’s often said about art that it cannot exist without an audience. That is, art is a relationship between creator and audience.

I have learned over my career that a similar truth holds for technology. Invention is only the beginning. Technology transfer — having people use what you’ve invented — is in some ways an even harder problem than invention.

I got hired into Microsoft to work on the Midori technical incubation project. I started in 2008, and we beavered away on an entirely new operating system (microkernel right up to web browser) for seven years, making staggering amounts of progress. We learned what was possible in a type-safe language with no compromises to either safety or performance.

We got a glimpse of the future.

But ultimately the piper had to be paid, and finally it was time to try to transfer all this into Windows… and the gap between what was there already, and what we had created, was unbridgeable. It’s not enough to make something new and wonderful. You have to be able to use that new wonderful thing together with all the old non-wonderful stuff, because technology changes slowly and piece by piece (at least if you are in a world where your existing customers matter, as Microsoft very, very much is).

So now I am working on a team that is taking Midori insights and applying them incrementally, in ways that make a difference here and now. It’s extremely satisfying work, since even though in some sense we’re reinventing the wheel, this time we get to attach it to an actual car that’s already driving down the road! Shipping code routinely into real products is a lot more satisfying than working in isolation on something you’re unsure will actually get used.

The critical part here is that we have confidence in what we’re doing, because we know what is possible. The invaluable Midori glimpse of the future has given us insights that we can leverage everywhere. This is not only true for our team; the Core CLR team is working on features in .NET that are based entirely on Midori experience, for example Span<T> for accessing arbitrary memory with maximal efficiency.

So even though it is going to take a lot longer than we originally thought, the ideas are living on and making it out into the world.

Holofunk in Metamorphosis

Microsoft has learned the lessons of the Kinect with its successor, HoloLens. The project lead on both Kinect and HoloLens is very clear that HoloLens is going to stay baking in the oven until it is irresistibly delicious. The Kinect launched far too soon, in his current view. It was a glimpse of the future, and it gave confidence in what was possible, but the world — and all the software that would make its potential clear — was not ready.

So now I view Holofunk as part of the original Kinect experiment. Holofunk in its current form may never run very well (or even at all) for anyone other than me, or on anything other than the finicky hardware I hand-pick.

But now that I’ve admitted this to myself, I am starting to have a bajillion ideas for how the overall concepts can be reworked into much more accessible forms. For instance:

- Why have the Kinect at all? The core interaction in Holofunk is “grab to record, let go to start looping.” So why not make a touchscreen looper that lets you just touch the screen to record, and stop touching to start looping?

- Moreover, why not make it a Universal Windows Platform application? If I can get that working without the ASIO dependency, suddenly anyone with a touch-screen Windows device can run it.

- I can also port it to HoloLens. I can bring Unity or some other HoloLens-friendly 3D engine in, so now I will be free of the graphics-layer issues and I’ll have something that’ll work on HoloLens out of the box.

- I can start on support for networking, so multiple people could share the same touch-screen “sound space” across multiple devices. I really would need this for HoloLens, as part of the Holofunk concept has always been that an audience can see what you are doing, but HoloLens will have no external video connections for a projector or anything. So a separate computer (perhaps with a Kinect!) will need to be involved to run the projector, and that means networking.
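The “grab to record, let go to start looping” interaction from the first idea above boils down to a tiny state machine. Here is a minimal sketch, assuming just three states and ignoring audio entirely; `LoopState`, `TouchLoop`, `Press`, and `Release` are hypothetical names for illustration, not Holofunk's actual code:

```csharp
using System;

public enum LoopState { Idle, Recording, Looping }

// Hypothetical sketch of one loop's lifecycle; no audio is captured here.
public sealed class TouchLoop
{
    public LoopState State { get; private set; } = LoopState.Idle;

    // Finger down (or hand grab): begin recording, but only from Idle.
    public void Press()
    {
        if (State == LoopState.Idle)
        {
            State = LoopState.Recording;
        }
    }

    // Finger up (or hand release): stop recording and begin looping.
    public void Release()
    {
        if (State == LoopState.Recording)
        {
            State = LoopState.Looping;
        }
    }
}
```

Whether the input is a Kinect hand pose or a touchscreen contact only changes what calls `Press` and `Release`, which is why the same design could plausibly carry over.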

All of these ideas are much, much more feasible given my existing Holofunk code (which has a bunch of helpful stuff for managing streams of multimedia data over time), and my Holofunk design experience (which has taught me more about gestural interface design than many people have ever learned, all of which will be immediately applicable to a HoloLens version).

I’ve had a glimpse of the future. It’s a fragile glimpse, and one which I can’t readily share. But now that I’ve accepted that, I can look towards the next versions, which if nothing else will be much easier for the rest of the world to play with.

Holofunk is going into a cocoon, and what emerges is going to be something quite different.

Thanks to everyone who’s enjoyed and supported me in this project so far — it’s brought me many new friends and moments of genuine musical and technical joy. I look forward to what is next… no matter how long it takes!

To John Carmack: Ship Gamer Goggles First, Then Put Faces And Bodies Into Cyberspace

John Carmack, CTO of Oculus, tweeted:

Everyone has had some time to digest the FB deal now. I think it is going to be positive, but clearly many disagree. Much of the ranting has been emotional or tribal, but I am interested in reading coherent viewpoints about objective outcomes. What are the hazards? What should be done to guard against them? What are the tests for failure? Blog and I’ll read.

I have already blogged on this but will make this a more focused response to John specifically.

Here are my objective premises:

- VR goggles, as currently implemented by the Rift, conceal the face and prevent full optical facial / eye capture.

- VR goggles, as currently implemented by the Rift, conceal the external environment; in other words, they are VR, not AR.

- Real-time person-to-person social contact is primarily based on nonverbal (especially facial) expression.

- Gamer-style “alternate reality” experiences are not primarily social, and are based on ignoring the external environment.

Here are my conclusions:

- A truly immersive social virtual environment must integrate accurate, low-latency, detailed facial and body capture.

- Therefore such an environment can’t be fundamentally based on opaque VR goggles, and will require new technologies and sensor integrations.

- Opaque VR goggles are, however, ideal for gamer-style experiences.

- Gamer experiences have never had full facial/body capture, and are based on ignoring the external environment.

- The more immersive such experiences are, the more people will want to participate in them.

- This means that gratuitous mandatory social features, in otherwise unrelated VR experiences, would fundamentally break that immersion and would damage the platform substantially.

- Goggle research and development will mostly directly benefit “post-goggle” augmented reality technology.

The hazards for Rift:

- If Facebook’s monetization strategy results in mandatory encounters with Facebook as part of all Rift experiences, this could break the primary thing that makes Rift compelling: convincing immersion in another reality that doesn’t much overlap with this one.

- If Facebook tries to build a Rift-based “online social environment” of the kind we have historically known (Second Life, Google Lively, PlayStation Home, Worlds Inc., etc., etc., etc.), it will be as niche as all those others. Most importantly, it will radically fail to achieve Facebook’s ubiquity ambitions.

- This is because true socializing requires full facial and nonverbal bandwidth, and Rift today cannot provide that. Nor can any VR/AR technology yet created, but that’s the research challenge here!

- If Facebook and Rift fail to pioneer the innovation necessary to deliver true augmented social reality (including controlled perception of your actual environment, and full facial and body capture of all virtual world participants), some other company will get there first.

- That other company, and not Facebook, will truly own the future of cyberspace.

- If Rift fails to initially deliver a deeply immersive alternate reality platform, it will not get developers to buy in.

- This risk seems smallest based on Rift’s technical trajectory.

What should be done to guard against them:

- Facebook integration should be very easy as part of the Rift platform, but must be 100% completely developer opt-in. Any mandatory Facebook integration will damage your long-term goals (creating the first true social virtual world, requiring fundamentally new technology innovation) and will further lose you mindshare among those skeptical of Facebook.

- Facebook should resist the temptation to build a Rift-based virtual world. I know everyone there is itching to get started on Snow Crash, and you could certainly build a fantastic one. But it would still be fundamentally for gamers, because gamers are self-selected to enjoy surreal online places that happen to be inhabited by un-expressive avatars.

- The world has lots of such places already; they’re called MMOGs, and the MMOG developers can do a better job putting their games into Rift than Facebook can.

- Facebook and Rift should immediately begin a long-term research project dedicated to post-goggle technology. Goggles are not the endgame here; in a fully social cyberspace, you’ll be able to see everyone around you (including those physically next to you), faces, bodies, and all. If you really want to put your long-term money where your mouth is, shoot for the post-goggle moon.

- Retinal projection glasses? LCD projectors inside a pair of glasses? Ubiquitous depth cameras? Facial tracking cameras? Full environment capture? Whatever it takes to really get there, start on it immediately. This may take over a decade to finally pan out, but you have the resources to look ahead that far now. This, and nothing less, is what’s going to make VR/AR as ubiquitous as Facebook itself is today.

- Meanwhile, of course, ship a fantastic Rift that provides gamers — and technophiles generally — with a stunning experience they’ve never had before. Sell the hardware at just over cost. Brand it with Facebook if you like, but try to make your money back on some small flat fee of title revenue (5%? as with Unreal now?), so you get paid something reasonable whether the developer wants to integrate with Facebook or not.

Tests for failure:

- Mandatory Facebook integration for Rift causes developers to flee Rift platform before it ships.

- “FaceRift” virtual world launches; Second Life furries love it, rest of world laughs, yawns, moves on.

- Valve and Microsoft team up to launch “Holodeck” in 2020, combining AR glasses with six Kinect 3’s to provide a virtual world in which you can stand next to and see your actual friends; immediately sell billions, leaving Facebook as “that old web site.”

- Initial Rift titles make some people queasy and fail to impress the others; Rift fails to sell to every gamer with a PC and a relative lack of motion sickness.

John, you’ve changed the world several times already. You have the resources now to make the biggest impact yet, but it’s got to be both a short-term (Rift) and long-term (true social AR) play. Don’t get the two confused, and you can build the future of cyberspace. Good luck.

(And minor blast from the past: I interviewed you at the PGL Championships in San Francisco fifteen years ago. Cheers!)

My Take On Zuckey & Luckey: VR Goggles Are (Only) For Gamers

I am watching the whole “Facebook buys Oculus Rift” situation with great bemusement.

I worked for a cyberspace company, Electric Communities, in the mid-nineties, during the first heady pre-dot-com wave of Silicon Valley VC fun.

We were building a fully distributed cryptographically based virtual world called Microcosm. In Java 1.0. On the client. In 1995. We had drunk ALL THE KOOL-AID.

(Click that for source. For a scurrilous and inaccurate — but evocative — take on it all, read this.)

We actually got some significant parts of this working — you could host rooms and/or avatars and/or objects, and you could go from space to space using fully peer-to-peer communication. Because, you see, we envisioned that the only way to make a full cyberspace work was for it to NOT be centralized AT ALL. Instead, everyone would host their own little bits of it and they would all join together into an initially-2D-but-ultimately-3D place, with individual certificates on everything so everyone could take responsibility for their own stuff. Take that, Facebook!!!

(I still remember someone raving at the office during that job, about this new search engine called Google… the concept of “web scale” did not exist yet.)

The whole thing collapsed completely when it became clear that it was too slow, too resource-intensive, and not nearly monetizable enough. I made a few lifelong friends at that job, though, several of whom have gone on to great success elsewhere (Dalvik architect, Google ES6 spec team member, Facebook security guru…).

I also worked at Autodesk circa 1991, in the very very first era of VR goggles, back when they looked like this:

Look familiar? This was from 1989. Twenty-five frickin’ years ago.

So I have a pretty large immunity to VR Kool-Aid. I actually think that Facebook is likely to just about get their money back on this deal, but they won’t really change the world. More specifically, VR goggles in general will not really change the world.

VR goggles are a fundamentally bad way to foster interpersonal interaction, because they obscure your entire face, and make it impossible to see your expression. In other words, they block facial capture. This means that they are the exact worst thing possible for Facebook, since they make you faceless to an observer.

This then means that they are best for relatively solitary experiences that transport you away from where you are. This is why they are a great early-adopter technology for the gamer geeks of the world. We are precisely the people who have *already* done all we can to transport ourselves into relatively solitary (in terms of genuine, physical proximity) otherworldly experiences. So VR goggles are perfect for those of us who are already gamers. And they will find a somewhat larger market among people who want to experience this sort of thing (kind of like super-duper 3D TVs).

But in their current form they are never going to be the thing that makes cyberspace ubiquitous. In a full cyberspace, you will have to be able to look directly at someone else *whether they are physically adjacent or not*, and you will have to see them — including their full face, or whatever full facial mapping their avatar is using — directly. This implies some substantially different display technology — see-through AR goggles a la CastAR, or nanotech internally illuminated contact lenses, or retinally scanned holograms, or direct optical neural linkage. But strapping a pair of monitors to your eyeballs? Uh-uh. Always going to be a “let’s go to the movies / let’s hang in the living room playing games” experience; never ever going to be an “inhabit this ubiquitous cyber-world with all your friends” experience.

Maybe Zuckerberg and Luckey together actually have the vision to shepherd Oculus through this goggle period and into the final Really Immersive Cyberworld. But my guess is the pressures of making enough money to justify the deal will lead to various irritating wrongnesses. Still, I expect they will ship a really great Oculus product and I may even buy one if the games are cool enough… but there will be goggle competitors, and it’s best to think of ALL opaque goggle incarnations as gamer devices first and foremost.

So why did Zuckerberg do this deal? I think it’s simple: he has Sergey Brin envy. Google has its moon-shot projects (self-driving cars, humanoid robots, Google Glass). Zuckerberg wants a piece of that. It’s more interesting than the Facebook web site, and he is able to get his company to swing $2 billion on a side project, so why not? Plus he and Luckey are an epic mutual admiration society. That psychology alone is sufficient explanation. It does lead to the amusingly absurd paradox of Facebook spending $2 billion on something that hides users’ faces, but such is our industry, and such has it ever been.

Realistically, the jury is still out on whether Oculus would have been better off going it alone (retaining the love of their community and their pure gaming focus, but needing to raise more and more venture capital to ramp up production), or going with Facebook (no more worries about money, until Facebook’s ad-based business model starts to screw everything up). The former path might have cratered before launch, or succumbed to deeper-pocketed competitors. The latter path has every chance of going wrong — if Facebook handles things as they did their web gaming efforts, it definitely will. We will see whether Zuckerberg can keep Facebook’s hands off of Oculus or not. I am sadly not sanguine… on its face, this is a bad acquisition, since it does not at all play to the technology’s gaming strengths.

It’s worth noting Imogen Heap’s dataglove project on Kickstarter. I was skeptical that they would get funded, but their AMA on Reddit convinced me they are going about it the best way they can, and they have a clear vision for how the things should work. So now I say, more power to them! Go support them! They are definitely purely community-driven, the way Oculus was until yesterday….

A Publication! In Public!

The team I work on doesn’t get much publicity. (Yet.)

But recently some guys I work with submitted a paper to OOPSLA, and it was accepted, so it’s a rare chance for me to discuss it:

This is really groundbreaking work, in my opinion. Introducing “readable”, “writable”, “immutable”, and “isolated” into C# makes it a quite different experience.

When doing language design work like this, it’s hard to know whether the ideas really hold up. That’s one epic advantage of this particular team I’m on: we use what we write, at scale. From section 6 of the paper:

A source-level variant of this system, as an extension to C#, is in use by a large project at Microsoft, as their primary programming language. The group has written several million lines of code, including: core libraries (including collections with polymorphism over element permissions and data-parallel operations when safe), a webserver, a high level optimizing compiler, and an MPEG decoder. These and other applications written in the source language are performance-competitive with established implementations on standard benchmarks; we mention this not because our language design is focused on performance, but merely to point out that heavy use of reference immutability, including removing mutable static/global state, has not come at the cost of performance in the experience of the Microsoft team. In fact, the prototype compiler exploits reference immutability information for a number of otherwise-unavailable compiler optimizations….

Overall, the Microsoft team has been satisfied with the additional safety they gain from not only the general software engineering advantages of reference immutability… but particularly the safe parallelism. Anecdotally, they claim that the further they push reference immutability through their code base, the more bugs they find from spurious mutations. The main classes of bugs found are cases where a developer provided an object intended for read-only access, but a callee incorrectly mutated it; accidental mutations of structures that should be immutable; and data races where data should have been immutable or thread local (i.e. isolated, and one thread kept and used a stale reference).

It’s true, what they say there.

Here’s just a bit more:

The Microsoft team was surprisingly receptive to using explicit destructive reads, as opposed to richer flow-sensitive analyses (which also have non-trivial interaction with exceptions). They value the simplicity and predictability of destructive reads, and like that it makes the transfer of unique references explicit and easy to find. In general, the team preferred explicit source representation for type system interactions (e.g. consume, permission conversion).

The team has also naturally developed their own design patterns for working in this environment. One of the most popular is informally called the “builder pattern” (as in building a collection) to create frozen collections:

    isolated List<Foo> list = new List<Foo>();
    foreach (var cur in someOtherCollection) {
        isolated Foo f = new Foo();
        f.Name = cur.Name;
        // etc ...
        list.Add(consume f);
    }
    immutable List<Foo> immList = consume list;

This pattern can be further abstracted for elements with a deep clone method returning an isolated reference.

I firmly expect that eventually I will be able to share much more about what we’re doing. But if you have a high-performance systems background, and if the general concept of no-compromises performance plus managed safety is appealing, our team *is* hiring. Drop me a line!

SlimDX vs. SharpDX

Phew! Been very busy around here. The Holofunk Jam, mentioned last post, went very well — met a few talented local loopers who gave me invaluable hands-on advice. Demoed to the Kinect for Windows team and got some good feedback there. My sister has requested a Holofunk performance at her wedding in Boston near the end of August, and before that, the Microsoft Garage team has twisted my arm to give another public demo on August 16th. Plus I had my tenth wedding anniversary with my wife last weekend. Life is full, full, FULL! And I’m in no way whatsoever complaining.

Time To Put Up, Or Else To Shut Up

One piece of feedback I’ve gotten consistently is that darn near everyone is skeptical that this thing can really be useful for full-on performance. “It’s a fun Kinect-y toy,” many say, “but it needs a lot of work before you can take it on stage.” This is emerging as the central challenge of this project: can I get it to the point where I can credibly rock a room with it? If I personally can’t use it to funk out in an undeniable and audience-connected manner, it’s for damn sure no one else will be able to either.

So it’s time to focus on performance features for the software, and improved beatboxing and looping skills for me!

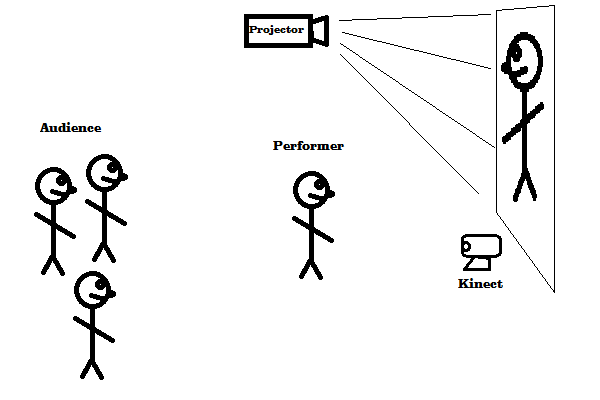

The number one performance feature it needs is dual monitor support. Right now, when you’re using Holofunk, you’re facing a screen on which your image is projected. The Kinect is under the screen, facing you, and the screen shows what the Kinect sees.

This is standard Kinect videogame setup — you are effectively looking at your mirrored video image, which moves as you do. It’s great… if you’re the only one playing.

But if you have an audience, then the audience is looking at your back, and you’re all (you and the audience) looking at the projected screen.

Like this — and BEHOLD MY PROGRAMMER ART!

No solo performer wants their back to the audience.

So what I need is dual screen support. I should be able to have Holofunk on my laptop. I face the audience; the laptop is between me and the audience, facing me; I’m watching the laptop screen and Holofunking on it. The Kinect is sitting by the laptop, and the laptop is putting out a mirror-reversed image for the projection screen behind me, which the audience is watching.

Like this:

With that setup, I can make eye contact with the audience while still driving Holofunk, and the audience can still see what I’m doing with Holofunk.

So, that’s the number one feature… probably the only major feature I’ll be adding before next month’s demos.

The question is, how?

XNA No More

Right now Holofunk uses the XNA C# graphics library from Microsoft. Problem is, this seems defunct; it is stuck on DirectX 9 (a several-year-old graphics API at this point), and there is no indication it will ever be made available for Windows 8 Metro.

I looked into porting Holofunk to C++. It was terrifying. I’ll be sticking with C#, thanks. But not only is XNA a dead end, it doesn’t support multiple displays! You get only one game window.

So I’ve got to switch sooner rather than later. The two big contenders in the C# world are SlimDX and SharpDX.

In a nutshell: SlimDX has been around for longer, and has significantly better documentation. SharpDX is more up-to-date (it already has Windows 8 support, unlike SlimDX), and is “closer to the metal” (it’s more consistently generated directly from the DirectX C++ API definitions).

As always in the open source world, one of the first things to check — beyond “do the samples compile?” and “is there any API documentation?” — is how many commits have been made recently to the projects’ source trees.

In the SlimDX case, there was a flurry of activity back in March, and since then there has been very little activity at all. In the SharpDX case, the developer is an animal and is frenetically committing almost every day.

SharpDX’s most recent release is from last month. SlimDX’s is from January.

Two of the main SlimDX developers have moved on (as explicitly stated in their blogs), and the third seems AWOL.

Finally, I found this thread about possible directions for SlimDX 2, and it doesn’t seem that anyone is actively carrying the torch.

So, SharpDX wins from a support perspective. The problem for me is, it looks like a lot of DirectX boilerplate compared to XNA.

I did, though, just turn up a reference to another project, ANX, an XNA-compatible API wrapper around SharpDX. That looks just about perfect for me. So I will be investigating ANX on top of SharpDX first; if that falls through, I’ll go with SharpDX alone.

This is daunting simply because it’s always a bit of a drag to switch to a new framework — they all have learning curves, and XNA’s was easy, but SharpDX’s won’t be. So I have to psych myself up for it a bit. The good news, though, is once I have a more modern API under the hood, I can start doing crazy things like realtime video recording and video texture playback… that’s a 2013 feature at the earliest, by the way 🙂

Holofunkarama

Life has been busy in Holofunk land! First, a new video:

While my singing needs work at one point, the overall concept is finally actually there: you can layer things in a reasonably tight way, and you can tweak your sounds in groups.

Holofunk Jam, June 23rd

I have no shortage of feature ideas, and I’m going to be hacking on this thing for the foreseeable future, but in the near term: on June 23rd I’m organizing a “Holofunk Jam” at the Seattle home of some very generous friends. I’m going to set up Holofunk, demo it, ask anyone & everyone to try it, and hopefully see various gadgets, loopers, etc. that people bring over. It would be amazing if it turned into a free-form electronica jam session of some kind! If this sounds interesting to you, drop me a line.

Demoing Holofunk

There have been two public Holofunk demos since my last post, both of them enjoyable and educational.

Microsoft had a Hardware Summit, including the “science fair” I mentioned in my last post. I wound up winning the “Golden Volcano” award in the Kinect category. GO ME! This in practice meant a small wooden laser-etched cube:

This was rather like coming in third out of about eight Kinect projects, which is actually not bad, as the competition was quite impressive (e.g. a team from India doing Kinect sign language recognition). The big lesson from this event: if someone is really interested in your project, don’t just give them your info, get their info too. I would love to follow up with some of the people who came, but they seem unfindable!

Then, last weekend, the Maker Faire did indeed happen (and shame on me for not updating this blog in realtime with it). I was picked as a presenter, and things went quite well, with no mishaps to speak of. In fact, I opened with a little riff, and when it ended I got spontaneous applause! Unexpected and appreciated. (They also applauded at the end.)

I videoed it, but did not record the PA system, which was a terrible failure on my part; all the camera picked up was the roar of the people hobnobbing around the booths in the presentation room. Still, it was a lot of fun and people seemed to like it.

My kids had a great time at the faire, too. Here they are watching (and hearing) a record player, for the very first time in their lives:

True children of the 21st century 🙂

Coming Soon

I’ll be making another source drop to http://holofunk.codeplex.com soon — trying to keep things up to date. And the next features on the list:

- effect selection / menuing

- panning

- volume

- reverb

- delay

- effect recording

- VST support

Well, maybe not that last one quite yet, but we’ll see. And of course practice, practice, practice!

Science fair time!

Holofunk has been externally hibernating since last September; first I took a few months off just on general principles, and since then I’ve been hacking on the down-low. In that time I’ve fixed Holofunk’s time sync issue (thanks again to the stupendous free support from the BASS Audio library guys). I’ve added a number of visual cues to help people follow what’s happening, including beat meters to show how many beats long each track is, and better track length setting — now tracks can only be 1, 2, or a multiple of 4 beats long, making it easy to line things up. Generally I’m in a very satisfying hacking groove now.
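The track-length rule (1, 2, or a multiple of 4 beats) is easy to express in code. Here is a minimal sketch, assuming a recording is rounded up to the next allowed length; the post does not say which way Holofunk actually rounds, and `TrackLength.SnapUp` is a hypothetical helper, not the real implementation:

```csharp
using System;

public static class TrackLength
{
    // Returns the smallest allowed track length (1, 2, or a multiple of 4)
    // that is at least as long as the number of beats recorded so far.
    // Rounding up is an assumption made for this sketch.
    public static int SnapUp(int beatsRecorded)
    {
        if (beatsRecorded < 1)
        {
            throw new ArgumentOutOfRangeException(nameof(beatsRecorded));
        }
        if (beatsRecorded <= 2)
        {
            return beatsRecorded;
        }
        // Integer arithmetic: round up to the next multiple of 4.
        return ((beatsRecorded + 3) / 4) * 4;
    }
}
```

Under this assumption a 3-beat take becomes a 4-beat loop and a 5-beat take becomes an 8-beat loop, so every track divides evenly into (or is a whole multiple of) every other one.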

And today Holofunk re-emerges into the public eye — I’m demoing at a Microsoft internal event dubbed the Science Fair, coinciding with Microsoft’s annual Hardware Summit. Root for me to win a prize if you have any good karma to spare today 🙂 I’ll post again in a day or two with an update on how it went.

I’ve also applied to be a speaker at the Seattle Mini Maker Faire the first weekend in June — will find out about that within a week. If that happens, then I’ll spread the word as to exactly when I’ll be presenting!